Local LLM Docker RAG App with Image Generation. An advanced guide to make and host your own AI tools.

NOTE: This guide is a work in progress. If you are reading this, it is not finished yet.

Table of contents

- Introduction

- Docker

- API (Python) + LLM

- RAG

- Laravel (PHP)

- Bringing it all together

Introduction

This guide is a lengthy, in-depth walkthrough of how to build your own local webpage where you can prompt a local LLM to generate AI images for social media content, together with a description based on your knowledge base. This can theoretically be done for companies, for yourself, or adjusted to build another kind of app on top of it. You are free to do with this knowledge as you please.

The intention of this app is to create a full post, with image and description, in one click. This works if all your prompts are preset well. You are very likely to get different results without changing anything, because of the nature of LLMs. Of course you can change the settings and prompts to adjust the results and make them even more dynamic.

In my example you can see that I did not mention Microsoft. Because the vector database on the active account contains the Microsoft wiki, the app still outputs relevant Microsoft information, and the end result is a picture of their product, as requested, plus a description of the visible product, in this case a laptop. Notice that my prompts are minimal to show you how it works.

Keep in mind this app was put together in a short amount of time, so the code is not totally cleaned, but I will try my best while writing this blog post to clean it up a bit.

The code and all tools were made last year, so some methods might be outdated, but the point of this post is to guide you and give you ideas about how to build such applications on your own.

Here is a screenshot of the current end result.

As you can see there are some functionalities that come with this app.

You can use multiple accounts and link different social media accounts to each of them. This makes it easy to switch if you have multiple brands or accounts.

At the moment only posting on Twitter works, but this could be expanded to use the APIs of Facebook, Instagram, etc.

You can create 4 prompts.

- The first prompt uses the RAG functionality: the Milvus vector database retrieves data related to the prompt, and the LLM then creates the result from it.

- The second prompt is asked about the previous result and gives you the prompt that will be used for the image. In other words: the result of this prompt is at the same time the prompt for the image.

- The third prompt is actually just the filename of the image, but you can even change this to a URL and it will show the picture from that URL. This makes it possible to use any other picture.

- The last prompt will be used to create the image description.

All of these prompts and also the results can be changed and will be saved using AJAX in the database after leaving the input field.
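To make the order of the prompts concrete, here is a minimal sketch of the chain in Python. It is only an illustration under assumptions: `make_fake_llm` is a hypothetical stand-in for the real model, and the dictionary keys simply mirror the field names used by the API later in this guide.

```python
from typing import Callable, Dict, List, Optional


def run_prompt_chain(
    ask: Callable[[str], str],
    prompt: str,
    comfy_prompt: Optional[str] = None,
    description_prompt: Optional[str] = None,
) -> Dict[str, str]:
    """Ask the prompts in order; `ask` is expected to keep its own chat history."""
    results = {"prompt": ask(prompt).strip()}
    if comfy_prompt:
        # The answer to this prompt doubles as the text prompt for the image.
        results["comfyPrompt"] = ask(comfy_prompt).strip()
    if description_prompt:
        # Quotes are stripped so the description can be posted as-is.
        results["descriptionPrompt"] = ask(description_prompt).strip().replace('"', "")
    return results


def make_fake_llm() -> Callable[[str], str]:
    """Hypothetical stand-in for the LLM: echoes the question with a turn counter."""
    history: List[str] = []

    def ask(question: str) -> str:
        history.append(question)
        return f" answer {len(history)} to: {question} "

    return ask


chain = run_prompt_chain(
    make_fake_llm(),
    "List a product",
    "Describe a photo of it",
    'Write a "caption"',
)
print(chain["comfyPrompt"])
```

The third prompt from the list (the filename or URL) is not part of this chain, because it never goes to the LLM.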

Let’s talk about some of the buttons.

- The first button is to switch accounts, on which you can have different social media channels.

- Then there is a button to add your Twitter account.

- There is a button to regenerate the image with the same prompt (result).

- There is a button to upscale the image 4x. You can also click on the image to get a fullscreen preview, and click again to zoom in. This helps to see every detail, since AI image generation can produce tiny artifacts that are sometimes hard to spot. TIP: always look closely at the hands and fingers.

- At the bottom you can generate a new post with all the current prompts.

- Finally, you can publish the post on the selected social media channels.

So now that we have covered the basic functionality of the app, it is time to dive into building the app.

Docker

We are going to build everything on Docker and also automate starting and stopping all the containers. But before we can talk about containers, we need some images.

Go ahead and download these images:

- nvidia/cuda:12.3.1-devel-ubuntu22.04 (https://hub.docker.com/layers/nvidia/cuda/12.3.1-devel-ubuntu22.04/images/sha256-af963432696b5f170a64ceddcac9fc4df01292f0f35d52e24bc0750ec169332d)

- vllm/vllm-openai:v0.4.1 (https://github.com/vllm-project/vllm)

- bitnami/laravel:latest (https://hub.docker.com/r/bitnami/laravel/)

Download these files from GitHub:

- milvusdb/milvus:v2.4.1 (https://github.com/milvus-io/milvus/blob/master/deployments/docker/gpu/standalone/docker-compose.yml)

- yanwk/comfyui-boot:latest (https://github.com/YanWenKun/ComfyUI-Docker/blob/main/cu121/docker-compose.yml)

The nvidia image will be used for our backend. This is where the whole backend logic including the API for the LLM will run.

Milvus will be used as our vector database, where we save our knowledge base. How this works will be explained later.

VLLM is where our LLM will actually run. We use VLLM because it can serve multiple clients at the same time and the speed is actually pretty good. It will also handle important tasks for us, so we don’t need to think about them.

Nvidia LLM Backend

Now open a command prompt and start the NVIDIA container with this command:

docker run --name llm-backend --gpus all -it -p 8000:8000 -p 8001:8001 -v C:\path-to-knowledgebase:/home c29823436e209ada1871122307765d4fc98aada073050a366a324340de818

Change the path to a local folder where you will store all scripts and the knowledge base for this container, and replace the long string at the end with the actual image ID, which you can find in Docker.

If you choose not to create a volume, you can also use this command to copy over files like scripts and the knowledge base. Just be sure to run it again after every change:

docker cp C:\path-to-knowledgebase llm-backend:home

Then, in CMD or in the exec tab of the container itself, run these commands:

apt-get update

apt-get install python3

apt-get install python3-pip

pip install torch

pip install llama_index

pip install transformers

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python --upgrade --force-reinstall --no-cache-dir

pip install pymilvus

pip install pypdf

pip install llama-index-embeddings-huggingface

pip install llama-index-vector-stores-milvus

pip install -U llama-index-core llama-index-llms-openai llama-index-embeddings-openai

pip install llama-index-llms-openai-like

pip install llama-index-postprocessor-colbert-rerank

Note that we will be using LlamaIndex (GitHub) as the framework for our backend logic. It communicates with the LLM, which runs on VLLM.

We also installed pymilvus so that we can create our vector database from this container.

VLLM

If you have downloaded the VLLM image, we can now run it with the following command:

docker run --name vllm-llama3-8b --gpus all -it -p 8000:8000 a7c55d342348072d49e1df0c510684f435856f7d701e5c2c1a43437c78 --model NousResearch/Meta-Llama-3-8B-Instruct

Again, replace the long string with the actual Docker image ID.

In this case I have used Llama3 8B Instruct, but you can use any other LLM that VLLM supports. See the VLLM documentation for the list of available models.

That is our VLLM server installed. It will handle pretty much everything for us, and it really is this simple to set up.
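As a quick check that the server answers, here is a minimal sketch that builds a request for the OpenAI-compatible chat endpoint that VLLM exposes under /v1/chat/completions. The base URL `http://localhost:8000/v1` is an assumption based on the `-p 8000:8000` mapping above, and `build_chat_request` is a hypothetical helper name. Sending the request is left commented out so the snippet also runs when the container is down.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, model: str, prompt: str, max_tokens: int = 300
) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.1,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(
    "http://localhost:8000/v1",  # assumed host-side address of the VLLM container
    "NousResearch/Meta-Llama-3-8B-Instruct",
    "Say hello in one sentence.",
)

# Uncomment to send the request once the VLLM container is running:
# with urllib.request.urlopen(request, timeout=60) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```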

Check out the VLLM documentation, because there are some handy arguments for when your GPU is low on VRAM or the model does not fit on your GPU completely. Here are two interesting ones:

--gpu-memory-utilization 0.9

--max-model-len 7168

Laravel with Bitnami

Laravel is a PHP framework which we will use to run our website logic, both frontend and backend. I won’t go too in-depth about Laravel, and you could probably also use Node.js or any other framework, but I chose Laravel because I am familiar with it, so the website in this guide will be based on that.

Bitnami is a complete package with all the tools Laravel runs on, such as a database and Apache. This allows us to get Laravel running very quickly without needing to configure much.

docker network create laravel-network

docker run -d --name mariadb -p 33066:3306 --env ALLOW_EMPTY_PASSWORD=yes --env MARIADB_USER=notroot --env MARIADB_DATABASE=chatai --network laravel-network --volume mariadb_data:/bitnami/mariadb bitnami/mariadb:latest

docker run -d --name ChatAILaravel -p 8010:8000 --env DB_HOST=mariadb --env DB_PORT=3306 --env DB_USERNAME=notroot --env DB_DATABASE=chatai --network laravel-network --volume ${PWD}/ChatAILaravel:/app bitnami/laravel:latest

First we created a Docker network, which makes communication between the containers possible. Then we created the MariaDB database container and finally the Laravel container.

You can find your Laravel website here: localhost:8010

Milvus

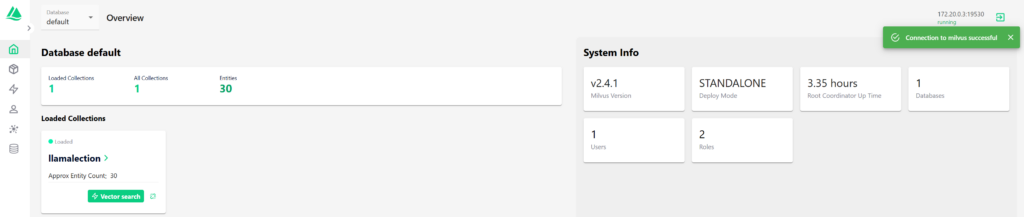

Milvus is our vector database. This is where our knowledge base will be stored. This makes it possible to send a prompt to this database to retrieve relevant information to then feed to our LLM.

You can kinda see this as a search engine, but the power is in the way you can configure it and the speed in which it retrieves data. I will go a little more in depth about it later.
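To give a feeling for what such a search does, here is a toy sketch of similarity search in plain Python. It is not how Milvus is implemented. The three-dimensional vectors and the texts are made up for illustration, whereas the real embeddings in this guide have 1024 dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query: List[float], store: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best matches."""
    scored = [(text, cosine_similarity(query, vector)) for text, vector in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up example data: text chunk -> tiny fake embedding.
store = {
    "Laptops with touch screens": [0.9, 0.1, 0.0],
    "Game consoles": [0.1, 0.9, 0.0],
    "Office software": [0.0, 0.2, 0.9],
}

hits = top_k([1.0, 0.0, 0.0], store, k=2)
for text, score in hits:
    print(f"{score:.3f}  {text}")
```

A vector database does the same kind of ranking, but with indexes that avoid comparing the query against every single stored vector.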

To create our Docker vector database with Milvus follow these steps:

Go to the folder where you have put the .yaml file. Then run:

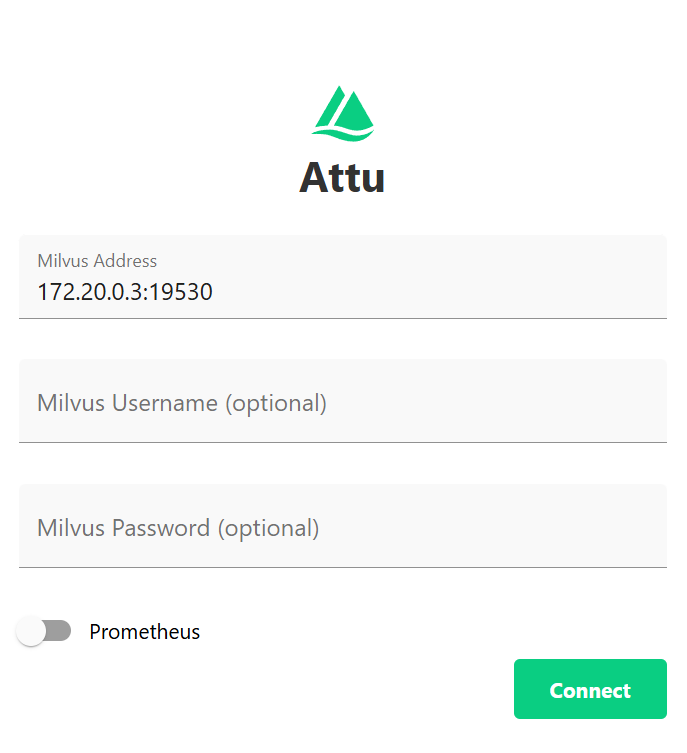

docker compose up -d

Optional: you can install Attu as a web UI for your vector database:

docker run -p 8003:3000 -e MILVUS_URL=172.20.0.4:19530 zilliz/attu:v2.3.1

You can now find the Attu web UI at: localhost:8003

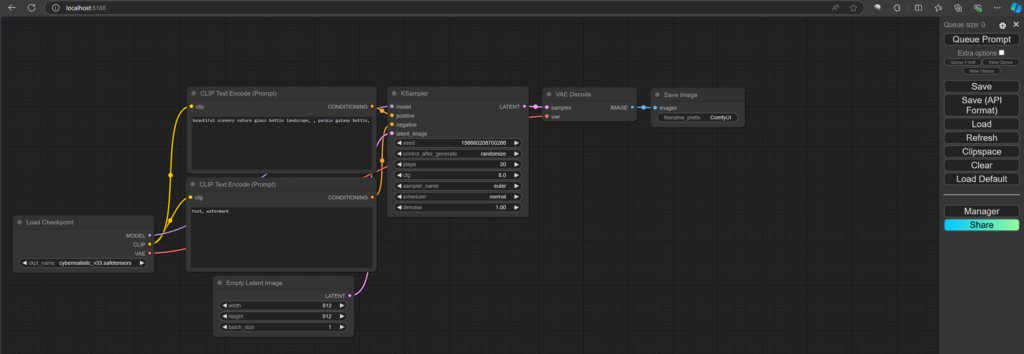

ComfyUI

ComfyUI is a very nice open source tool that makes use of Stable Diffusion models and makes it very user friendly to create workflows for AI, mostly for image generation, but I have also seen people use it for LLM workflows. In this guide we will use it for image generation only. So you can see it as a local LLM for image generation.

Run ComfyUI as shown below, but be sure to check out the project's GitHub page first: I noticed it has changed since I used it, and there are multiple CUDA versions, so pick the one that matches your CUDA version.

The command below starts the container. Make sure to change the storage path:

docker run -it \
  --name comfyui \
  --gpus all \
  -p 8188:8188 \
  -v "$(pwd)"/storage:/home/runner \
  -e CLI_ARGS="" \
  yanwk/comfyui-boot:latest

This starts the ComfyUI container, which will be available at localhost:8188.

Docker networks

Now we will connect all our containers.

docker network connect laravel-network llm-backend

docker network connect laravel-network mariadb

docker network connect laravel-network ChatAILaravel

docker network connect laravel-network comfyui

docker network create llm-network

docker network connect llm-network llm-backend

docker network connect llm-network milvus-standalone

docker network connect llm-network vllm-llama3-8b

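docker network connect llm-network angry_kapitsa

Note that angry_kapitsa is a name Docker generated automatically for a container that was started without --name. Replace it with the name that docker ps shows on your machine.

Scripts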

Now we want our Docker containers to start and stop with little effort. That is why I created 2 .bat scripts.

Start.bat:

docker start llm-backend

docker start milvus-standalone

docker start milvus-minio

docker start milvus-etcd

docker start vllm-llama3-8b

docker start mariadb

docker start ChatAILaravel

docker start comfyui

docker start file-chown

docker exec -d llm-backend /bin/bash -c "cd /home/code/ && uvicorn vllm-api-chatai:app --host 0.0.0.0 --port 8001"

start "" "http://localhost:8188"

start "" "http://localhost:8010/posts"

@echo off

echo Running Comfyui hotfolder. Don't close this window.

python "%~dp0hotfolder.py"

pause

Stop.bat:

powershell.exe -Command "& {docker stop $(docker ps -q)}"

The start script might look a little complex, but all it does is start the Docker containers and run the API, which we will code later on.

Then it opens ComfyUI and the Laravel site in your browser. These two steps are optional.

At the end it will run a hotfolder script, which will take care of new images by moving them to the Laravel public folder.

So if it doesn’t make sense yet, it will soon.

The stop script uses PowerShell to stop all running Docker containers.
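The hotfolder script itself is not shown in this part of the guide. As a rough idea of what such a script can look like, here is a minimal sketch that polls a folder and moves new PNG files to another folder. The function names, the polling interval and the example paths are all assumptions, not the actual hotfolder.py.

```python
import shutil
import time
from pathlib import Path
from typing import List


def move_new_images(source: Path, target: Path) -> List[str]:
    """Move every PNG from `source` to `target` and return the moved file names."""
    target.mkdir(parents=True, exist_ok=True)
    moved = []
    for image in sorted(source.glob("*.png")):
        shutil.move(str(image), str(target / image.name))
        moved.append(image.name)
    return moved


def watch(source: Path, target: Path, interval_seconds: float = 2.0) -> None:
    """Poll the source folder forever; stop with Ctrl+C."""
    while True:
        for name in move_new_images(source, target):
            print(f"Moved {name}")
        time.sleep(interval_seconds)


# Example call with hypothetical paths, adjust them to your own folders:
# watch(Path("storage/ComfyUI/output"), Path("ChatAILaravel/public/pictures"))
```

Polling keeps the sketch dependency-free. A file that is still being written when the poll runs could be moved too early, so a real script may want to wait until the file size stops changing.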

API (Python) + LLM

Now we are going to code and configure the API so we can actually use the LLM and communicate between the containers to do the stuff we need to do.

So let’s dive straight into it with the first big piece of code. This will be our API, which takes the prompts from our webpage and feeds them to VLLM to get the results.

Put the following file in the llm-backend folder, in a subfolder called code, so C:\path-to\llm-backend\code.

vllm-api-chatai.py

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

from llama_index.core import ServiceContext,StorageContext,VectorStoreIndex,PromptTemplate #v10

from llama_index.vector_stores.milvus import MilvusVectorStore #v10

model = 'NousResearch/Meta-Llama-3-8B-Instruct'

openai_api_base = "http://172.20.0.4:8000/v1"

from llama_index.llms.openai_like import OpenAILike

llm = OpenAILike(

model=model,

api_base=openai_api_base,

api_key="fake",

api_type="fake",

max_tokens=300,

temperature=0.1,

is_chat_model=True,

query_wrapper_prompt=PromptTemplate("As an expert Q&A system answer the question based on the given context.")

)

vector_store = MilvusVectorStore(

uri="http://172.20.0.3:19530",

dim=1024,

collection_name = "llamalection",

)

MEM_PROMPT = ''

CHAT_HISTORY = []

QUESTION_COUNT = 0

embed_model_name = 'BAAI/bge-m3'

embed_model = HuggingFaceEmbedding(

model_name=embed_model_name,

device='cuda',

normalize=True

)

service_context = ServiceContext.from_defaults(

llm=llm,

embed_model=embed_model,

)

storage_context = StorageContext.from_defaults(vector_store=vector_store)

index = VectorStoreIndex.from_vector_store(vector_store, service_context=service_context, storage_context=storage_context)

#from llama_index.memory import ChatMemoryBuffer #old version

from llama_index.core.memory import ChatMemoryBuffer

memory = ChatMemoryBuffer.from_defaults(token_limit=2000)

from llama_index.postprocessor.colbert_rerank import ColbertRerank

colbert_reranker = ColbertRerank(

top_n=5,

model="colbert-ir/colbertv2.0",

tokenizer="colbert-ir/colbertv2.0",

keep_retrieval_score=True,

)

from llama_index.core.postprocessor import SimilarityPostprocessor

chat_engine = index.as_chat_engine(

node_postprocessors=[

colbert_reranker,

SimilarityPostprocessor(similarity_cutoff=0.7)

],

chat_mode="context",

memory=memory,

system_prompt=(

"You are an expert Q&A system that is trusted around the world. Answer questions based on the given context."

),

)

# Import the libraries

from fastapi import FastAPI, Request

from transformers import pipeline

from pydantic import BaseModel

# Create a FastAPI app

app = FastAPI()

from typing import Optional, Dict

import json

# Create a class for the input data
class InputData(BaseModel):
    prompt: str
    comfyPrompt: Optional[str] = None
    descriptionPrompt: Optional[str] = None

# Create a class for the output data
class OutputData(BaseModel):
    response: str

# Create a route for the web application
@app.post("/generate", response_model=OutputData)
def generate(request: Request, input_data: InputData):
    # Start every request with an empty chat history
    chat_engine.reset()

    # Get the prompts from the input data
    prompt = input_data.prompt
    comfyPrompt = input_data.comfyPrompt
    descriptionPrompt = input_data.descriptionPrompt

    print("prompt: "+str(prompt))
    print("comfyPrompt: "+str(comfyPrompt))
    print("descriptionPrompt: "+str(descriptionPrompt))

    responses = {}

    # First prompt: answered with the help of the knowledge base (RAG)
    question = prompt
    response_text = chat_engine.chat(question)
    responses['prompt'] = response_text.response.strip()

    # Second prompt: its answer is the prompt for the image
    if comfyPrompt:
        question = comfyPrompt
        response_text = chat_engine.chat(question)
        responses['comfyPrompt'] = response_text.response.strip()

    # Last prompt: its answer is the description of the post
    if descriptionPrompt:
        question = descriptionPrompt
        response_text = chat_engine.chat(question)
        responses['descriptionPrompt'] = response_text.response.strip().replace('"', '')

    chat_engine.reset()

    responses = json.dumps(responses)
    print(responses)

    # Return the response as output data
    return OutputData(response=responses)

When run, this script creates a FastAPI interface that uses the LlamaIndex framework, with its OpenAILike client, to talk to the LLM on VLLM.

That was a mouthful.

Basically, this makes communication between the containers work. Check out the LlamaIndex documentation if you want to learn more.

You can change the models, you can change the engine, you can change the way it works to meet whatever you want to build.

This part is highly customizable.
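One detail that is easy to miss: the /generate route puts the output of json.dumps into the response field, so a client receives JSON inside JSON and has to decode twice. Here is a minimal sketch of that decoding. `parse_generate_response` is a hypothetical helper name and the sample values are made up.

```python
import json
from typing import Dict


def parse_generate_response(body: str) -> Dict[str, str]:
    """Decode the body returned by /generate into a plain dictionary of results."""
    outer = json.loads(body)  # {"response": "<JSON string>"}
    inner = json.loads(outer["response"])  # {"prompt": "...", "comfyPrompt": "..."}
    return inner


# Made-up body in the same shape the API returns.
sample_body = json.dumps(
    {"response": json.dumps({"prompt": "A laptop", "comfyPrompt": "photo of a laptop"})}
)

parsed = parse_generate_response(sample_body)
print(parsed["comfyPrompt"])
```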

Be sure to use the correct Docker IP addresses here. If you use the start script from earlier you should be fine, since Docker assigns IP addresses in the order in which the containers are started.
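If you would rather not depend on the start order, one option (a suggestion, not what the scripts in this guide do) is to address the containers by name, because containers on the same user-defined Docker network can resolve each other by container name. The sketch below reads the addresses from environment variables and falls back to name-based defaults. The variable names and the `service_url` helper are assumptions.

```python
import os


def service_url(env_name: str, default: str) -> str:
    """Read a service address from the environment, falling back to a default."""
    return os.environ.get(env_name, default).rstrip("/")


# Defaults use the container names from the docker commands earlier in this guide.
VLLM_API_BASE = service_url("VLLM_API_BASE", "http://vllm-llama3-8b:8000/v1")
MILVUS_URI = service_url("MILVUS_URI", "http://milvus-standalone:19530")

print(VLLM_API_BASE)
print(MILVUS_URI)
```

These two values could then replace the hard-coded `openai_api_base` and `uri` strings in the API script.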

RAG

Now that the API is set up, we need to fill our Milvus vector database with our knowledge base.

RAG stands for Retrieval Augmented Generation. It retrieves relevant data from our vector database and hands it to the LLM as context, so the answer is based on our own knowledge base.

In my example at the top of this page I asked it to give me data about products and it gave me the Microsoft products that I fed it.
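The "augmented" part boils down to pasting the retrieved text into the prompt before the question. LlamaIndex does this for us in the API script, so the following is only a simplified sketch of the idea. The prompt wording, the `build_rag_prompt` name and the sample chunks are made up.

```python
from typing import List


def build_rag_prompt(question: str, retrieved_chunks: List[str], max_chunks: int = 5) -> str:
    """Combine retrieved text chunks and the user question into one prompt."""
    context = "\n\n".join(
        f"[{number}] {chunk.strip()}"
        for number, chunk in enumerate(retrieved_chunks[:max_chunks], start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up chunks standing in for what the vector database would return.
rag_prompt = build_rag_prompt(
    "Which products can I promote?",
    ["  Surface is a line of laptops. ", "Xbox is a game console."],
)
print(rag_prompt)
```

This is why the example post could mention Microsoft products without the prompt naming them: the retrieved chunks carry that information into the prompt.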

I am using 1 script which we will put in the same code folder as the previous script.

milvus_docs.py

from pypdf import PdfReader

CHUNK_SIZE = 1024

CHUNK_OVERLAP = 100

import os

path = "/home/knowledgebase/"

pdf_files = [file for file in os.listdir(path) if file.lower().endswith('.pdf')]

from llama_index.core import Document

doc = []

# Turn every page of every PDF into its own Document
for file in pdf_files:
    print(path+file)
    reader = PdfReader(path+file)
    for idx, page in enumerate(reader.pages):
        doc.append(Document(text=page.extract_text(),
            metadata={
                'source': f'{file}',
                'page': f'{idx+1}',
                'link':f'{file}'
            },
            excluded_llm_metadata_keys=['link'],
            excluded_embed_metadata_keys=['source', 'page', 'link']
        ))

print(f'Number of pages {len(doc)}')

from llama_index.core.node_parser import SimpleNodeParser

parser = SimpleNodeParser.from_defaults(include_metadata=True, chunk_size=CHUNK_SIZE, chunk_overlap=CHUNK_OVERLAP)

nodes = parser.get_nodes_from_documents(doc)

print(f'Parsed the {len(doc)} pages into {len(nodes)} nodes')

from llama_index.core import ServiceContext,StorageContext,VectorStoreIndex,PromptTemplate

from llama_index.vector_stores.milvus import MilvusVectorStore

import torch

# Detect hardware acceleration device
if torch.cuda.is_available():
    device = 'cuda'
elif torch.backends.mps.is_available():
    device = 'mps'
else:
    device = 'cpu'

print(f'Using device: {device}')

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

embed_model_name = 'BAAI/bge-m3'

# Import embedding model from HuggingFace

embed_model = HuggingFaceEmbedding(

model_name=embed_model_name,

device = device,

normalize=True,

)

vector_store = MilvusVectorStore(

uri="http://172.20.0.3:19530",

overwrite=True,

dim=1024,

collection_name="llamalection",

)

storage_context = StorageContext.from_defaults(vector_store=vector_store)

service_context = ServiceContext.from_defaults(embed_model=embed_model,

llm = None, # We will set the LLM when we open the DB

chunk_size=CHUNK_SIZE,

chunk_overlap=CHUNK_OVERLAP

)

vector_store_index = VectorStoreIndex(nodes=nodes,

storage_context=storage_context,

service_context=service_context,

show_progress=True)

index = VectorStoreIndex.from_vector_store(vector_store, storage_context=storage_context)

index.storage_context.persist()

print('Completed')

Now create a subfolder named knowledgebase in the main folder, so that you have two folders: code and knowledgebase.

Inside knowledgebase, put all your PDFs and then run the script from the llm-backend exec tab:

python3 /home/code/milvus_docs.py

Your files should now have been chunked and added to the vector database. You should see them added successfully in Attu: localhost:8003

You can play with settings such as CHUNK_SIZE and CHUNK_OVERLAP to optimize your vectors. You can find tutorials on how to do this on the world wide web.
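To see what the chunk size and overlap settings do, here is a simplified sketch. It splits on characters, while the LlamaIndex parser used in the script works with tokens and tries to respect sentence boundaries, so treat it purely as an illustration of the overlap idea. `chunk_text` is a hypothetical helper.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into chunks of chunk_size characters that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1)
print(chunks)  # ['abcd', 'defg', 'ghij']
```

Each chunk starts with the last character of the previous one. With a larger overlap, less context is lost at the chunk borders, at the cost of storing more vectors.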

Now our API should have access to our RAG knowledge base.

Laravel (PHP)

Now we are going to code most of our logic which will be done in PHP. Here we will use the Laravel framework to control every aspect of our processes.

This will be our front-end and back-end. It will communicate with our LLM API, which is backed by the Milvus vector database, and with ComfyUI (Stable Diffusion) to get the text and images.

It helps if you already have some experience with an MVC (Model View Controller) framework, because I will blast through all the scripts without much explanation.

I will assume you will already have installed Laravel and are working from its main directory.

Create app\Http\Controllers\AccountController.php

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

use App\Models\Account;

use Illuminate\Support\Facades\Auth;

class AccountController extends Controller

{

public function switch(Request $request)

{

$request->validate([

'account_id' => 'required|exists:accounts,id',

]);

$userId = Auth::user()->id;

$account = Account::find($request->account_id);

if ($account->user_id !== $userId) {

return redirect()->back()->with('error', 'You do not have permission to switch to this account.');

}

$request->session()->put('selectedAccountId', $account->id);

return redirect()->back()

->with('success', 'Account switched successfully.');

}

public function sessionAccountId($session)

{

if (!empty(session($session))) {

return session($session);

}

return session($session, Auth::user()->accounts->first()->id);

}

}

Create app\Http\Controllers\ComfyController.php

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

use Illuminate\Support\Facades\Auth;

use App\Services\ComfyService;

class ComfyController extends Controller

{

protected $comfyService;

public function __construct(ComfyService $comfyService)

{

$this->comfyService = $comfyService;

}

public function promptApi($promptArray)

{

// Call the API

$response = $this->comfyService->queuePrompt($promptArray);

// Handle the response

if (isset($response['error'])) {

return response()->json(['error' => $response['error']], $response['status']);

} else {

return response()->json($response);

}

}

}

Create app\Http\Controllers\LlmController.php

<?php

namespace App\Http\Controllers;

use Illuminate\Support\Facades\Http;

use Exception;

use Illuminate\Http\Request;

use App\Models\Post;

use App\Models\Account;

use App\Http\Controllers\ComfyController;

use App\Http\Controllers\AccountController;

use Illuminate\Support\Str;

class LlmController extends Controller

{

public function generate(Request $request, $extra) {

$AccountController = app()->make(AccountController::class);

$accountId = $AccountController->sessionAccountId('selectedAccountId');

$account = Account::find($accountId);

$postId = $account->posts->last()->id;

$post = Post::where('id', $postId)->first();

if ($extra === 'pic') {

$this->comfy($post);

return redirect()->back()->with('success', 'Generating picture.');

}

$data = [

'prompt' => $post->text->prompt,

'comfyPrompt' => $post->pic->comfyPrompt,

'descriptionPrompt' => $post->text->descriptionPrompt,

];

return $this->llm($post, $data);

}

public function llm($post, $data) {

$url = env('LLM_GENERATE_URL');

try {

$response = Http::timeout(60)->asJson()->post($url, $data); // Request vLLM

} catch (Exception $e) {

return response()->json(['error' => $e->getMessage()]);

}

if ($response->successful()) {

$serverResponse = $response->json()['response'];

$responses = json_decode($serverResponse);

foreach ($responses as $response => $value) {

// $post = Post::where('id', $postId)->first();

if($response == 'prompt') {

$model = $post->text;

$post->text->result = $value;

}

if($response == 'comfyPrompt') {

$model = $post->pic;

$post->pic->comfyResult = $value;

}

if($response == 'descriptionPrompt') {

$model = $post->text;

$post->text->descriptionResult = $value;

}

$model->save();

}

$this->comfy($post);

return redirect()->back()->with('success', 'Generating post.');

} else {

return response()->json(['error' => 'Request to the vLLM server failed. ' . $response->body()], $response->status());

}

}

public function comfy($post) {

$comfyController = app()->make(ComfyController::class);

$jsonFile = 'resources/json/workflow.json';

$model = $post->pic;

$date = date('Y-m-d');

$randomNumber = Str::random(10);

$fileExt = "_00001_.png";

$fileName = $date . '-' . $randomNumber;

$post->pic->location = $fileName.$fileExt;

$model->save();

if ($jsonFile == '') {

$jsonFile = 'resources/json/generateExample.json';

}

$promptText = json_decode(file_get_contents(base_path($jsonFile)), true);

// Modify the prompt as needed

$prompt = $post->pic->comfyResult;

$promptText["2"]["inputs"]["text"] = $prompt;

$promptText["60"]["inputs"]["filename_prefix"] = $fileName;

$promptText["17"]["inputs"]["width"] = 512;

$promptText["17"]["inputs"]["height"] = 768;

$promptText["36"]["inputs"]["seed"] = rand(0, 999999999999999);

$promptArray = [

'prompt' => $promptText

];

return $comfyController->promptApi($promptArray);

}

public function upscale(Request $request) {

$comfyController = app()->make(ComfyController::class);

$AccountController = app()->make(AccountController::class);

$accountId = $AccountController->sessionAccountId('selectedAccountId');

$account = Account::find($accountId);

$postId = $account->posts->last()->id;

$post = Post::where('id', $postId)->first();

$jsonFile = 'resources/json/upscaler.json';

$promptText = json_decode(file_get_contents(base_path($jsonFile)), true);

$file = $post->pic->location;

$date = substr($file, 0, 10);

$randomNumber = substr($file, 11, 10);

$fileExt = "_00001_.png"; // Comfyui adds this after saving

$fileName = $date . '-' . $randomNumber;

$fileNameNew = $date . '-' . $randomNumber."_upscaled";

if (substr($file, 21, 9) == "_upscaled") { // the 9 characters after "date-random" (offset 21) read "_upscaled" when the picture is already upscaled

return redirect()->back()->with('error', 'Picture is already upscaled.');

}

$prompt = $post->pic->comfyResult;

$promptText["4"]["inputs"]["text"] = $prompt;

$promptText["2"]["inputs"]["image"] = $fileName.$fileExt;

$promptText["3"]["inputs"]["tile_width"] = 1024;

$promptText["3"]["inputs"]["tile_height"] = 1536;

$promptText["3"]["inputs"]["seed"] = rand(0, 999999999999999);

$promptText["25"]["inputs"]["seed"] = rand(0, 999999999999999);

$promptText["17"]["inputs"]["filename_prefix"] = $fileNameNew;

$promptArray = [

'prompt' => $promptText

];

$returnData = $comfyController->promptApi($promptArray);

$returnContent = json_decode($returnData->getContent());

if (isset($returnContent->error)) {

return redirect()->back()->with('error', 'Error upscaling picture.');

}

$model = $post->pic;

$post->pic->location = $fileNameNew.$fileExt;

$model->save();

return redirect()->back()->with('success', 'Upscaling picture.');

}

}

Create app\Http\Controllers\PostController.php

<?php

namespace App\Http\Controllers;

use App\Models\Post;

use App\Models\Text;

use App\Models\Pic;

use App\Models\Account;

use App\Models\Llm;

use App\Models\Sm;

use App\Models\User;

use Illuminate\Http\Request;

use Carbon\Carbon;

use Illuminate\Support\Facades\Auth;

use Illuminate\Support\Facades\Storage;

use App\Http\Controllers\TweetController;

use App\Http\Controllers\AccountController;

class PostController extends Controller

{

public function index(Request $request)

{

if ($request->has('success')) {

session()->flash('success', $request->input('success'));

}

Auth::loginUsingId(1); // CHANGE: hard-coded login as user 1, replace this with real authentication

$user = Auth::user();

$userId = $user->id;

$AccountController = app()->make(AccountController::class);

$accountId = $AccountController->sessionAccountId('selectedAccountId');

$posts = Post::whereHas('account', function ($query) use ($userId, $accountId) {

$query->whereHas('user', function ($query) use ($userId) {

$query->where('id', $userId);

})->where('id', $accountId);

})->with('text', 'pic', 'account.llms', 'account.sms')->get();

return view('posts.index', compact('posts'));

}

public function publish(Request $request, $postId)

{

// Get the selected checkboxes

$selectedSms = $request->input('sm');

$selectedSmIds = $request->input('sm_id');

if ($selectedSms) {

$AccountController = app()->make(AccountController::class);

$accountId = $AccountController->sessionAccountId('selectedAccountId');

$account = Account::find($accountId);

// Process the selected social media accounts

foreach ($selectedSmIds as $index => $sm) {

$smDB = Sm::find($sm);

$smName = $smDB->name;

if ($smDB->account_id !== $account->id) {

return redirect()->back()->with('error', 'You do not have permission to switch to this account.');

}

// Check which social media needs to be published to

if($smName === 'twitter'){

$tweetController = new TweetController;

$tweetController->sendTweet($postId);

}

}

return redirect()->back()->with('success', 'Post published on social media successfully.');

}

return redirect()->back()->with('error', 'Post was not published. No social media selected.');

}

public function create()

{

// Check if there are any accounts

$AccountController = app()->make(AccountController::class);

$accountId = $AccountController->sessionAccountId('selectedAccountId');

$account = Account::find($accountId);

$userId = Auth::user()->id;

if ($account && $account->user_id !== $userId) {

return redirect()->back()->with('error', 'You do not have permission to create this post.');

}

if (!$account) {

// If there are no accounts, create a new one

$account = new Account;

$account->name = 'name';

$account->save(); // Assuming the account table has no required fields other than id

}

// Create a new text record

$text = new Text;

$text->prompt = 'Prompt'; // Replace with actual logic for generating text prompts

$text->account_id = $account->id;

$text->save();

// Create a new pic record

$pic = new Pic;

$pic->location = 'Location'; // Replace with actual logic for generating picture locations

$pic->account_id = $account->id;

$pic->save();

// Create a new llm record

$llm = new Llm;

$llm->name ='name';

$llm->url = 'url';

$llm->model = 'model';

$llm->tokens = 1000;

$llm->temp = 0.1;

$llm->systemprompt = 'systemprompt';

$llm->type = 'type';

$llm->account_id = $account->id;

$llm->save();

// Create a new post record with the newly created text and pic

$post = new Post;

$post->text_id = $text->id;

$post->pic_id = $pic->id;

$post->account_id = $account->id;

$post->save();

return redirect()->route('posts.index')->with('success', 'Post created successfully.');

}

public function updateField(Request $request, $postId)

{

$post = Post::findOrFail($postId);

$model = $request->input('model'); // The model to update (e.g., 'text', 'pic', 'account.llms')

$field = $request->input('field'); // The field to update within the model

$value = $request->input('value'); // The new value for the field

// Use dot notation to access nested relationships

$fields = explode('.', $model);

$relation = array_shift($fields);

if ($model === 'post' && $field === 'time') {

$post->time = Carbon::parse($value)->toDateTimeString();

$post->save();

return response()->json(['success' => true]);

}

// Check if the relation is directly accessible on the Post model

if (method_exists($post, $relation)) {

$relatedModel = $post->$relation;

} else {

// If not, traverse the relationship chain

foreach ($fields as $field) {

if ($relatedModel && method_exists($relatedModel, $field)) {

$relatedModel = $relatedModel->$field;

} else {

// Handle the case where the relationship chain is invalid

return response()->json(['success' => false, 'message' => 'Invalid relationship chain']);

}

}

}

// Update the field with the new value on the related model

$relatedModel->$field = $value;

$relatedModel->save();

return response()->json(['success' => true]);

}

public function pictureFile($accountid, $filename)

{

$userId = Auth::id();

// Only serve pictures from an account that belongs to the logged-in user

$account = Account::where('id', $accountid)->where('user_id', $userId)->first();

$picture = Pic::where('account_id', $accountid)->where('location', $filename)->first();

if ($account && $picture) {

$path = env('PIC_PATH') . $picture->location;

if (Storage::disk('local')->exists($path)) {

return response()->file(Storage::disk('local')->path($path));

}

}

abort(404);

}

public function checkFile($postId) {

$post = Post::find($postId);

if (empty($post->pic)) {

return response()->json(['pictureExists' => false]);

}

$path = env('PIC_PATH') . $post->pic->location;

$pictureExists = Storage::disk('local')->exists($path);

return response()->json(['pictureExists' => $pictureExists]);

}

}

Create app\Http\Controllers\SMController.php

<?php

namespace App\Http\Controllers;

use Laravel\Socialite\Facades\Socialite;

use Illuminate\Support\Facades\Auth;

use App\Models\Sm;

use App\Models\Account;

use Illuminate\Http\Request;

class SMController extends Controller

{

public function redirectToProvider($provider)

{

return Socialite::driver($provider)->redirect();

}

public function callback($service) {

$user = Socialite::driver($service)->user();

$accountId = session('selectedAccountId');

// Create or update the social media entry for the selected account

$sm = Sm::updateOrCreate(

['name' => $service, 'account_id' => $accountId], // The attributes to check for existence

[

'data' => json_encode($user), // Store the entire user object as JSON

]

);

return redirect()->route('sms.social.index', ['id' => $sm->id])->with('user', $user)->with('service', $service);

}

public function index(Request $request, $id)

{

$sm = Sm::findOrFail($id);

$userData = json_decode($sm->data, true);

if ($userData==null){return 'userData is null, might be json fail in sm data';}

$service = $sm->name;

$id = $sm->id;

return view('sms.social', compact('userData', 'service', 'id'));

}

public function list(Request $request)

{

return view('sms.list');

}

public function delete(Request $request)

{

$selectedSms = $request->input('sm');

$selectedSmIds = $request->input('sm_id');

// Check if any checkboxes were selected

if ($selectedSms) {

$account = Account::find(session('selectedAccountId'));

// Process the selected social media accounts

foreach ($selectedSms as $index => $smName) {

// Get the ID of the current SM

$smId = $selectedSmIds[$index];

// Check if we are allowed to delete

$sm = Sm::find($smId);

if (!$sm || !$account || $sm->account_id !== $account->id) {

return redirect()->back()->with('error', 'You do not have permission to delete this social media entry.');

}

// Perform the delete operation for each selected SM

$sm->delete();

}

return redirect()->back()->with('success', 'You have successfully deleted a social media entry.');

}

return redirect()->back()->with('error', 'Nothing has been deleted. No social media selected.');

}

}

Create app\Http\Controllers\TweetController.php

<?php

namespace App\Http\Controllers;

use Abraham\TwitterOAuth\TwitterOAuth;

use App\Models\Sm;

use App\Models\Post;

use Illuminate\Support\Facades\Storage;

class TweetController extends Controller

{

public function sendTweet($postId){

$post = Post::where('id', $postId)->first();

$accountId = $post->account_id;

$SocialMedia = 'twitter';

$SmRow = Sm::where('account_id', $accountId)->where('name', $SocialMedia)->first();

if (empty($SmRow->data)) {

return redirect()->back()->with('error', 'Error: Cannot find social media account.');

}

$SmDataJson = json_decode($SmRow->data);

$CONSUMER_KEY = env('TWITTER_CLIENT_ID');

$CONSUMER_SECRET = env('TWITTER_CLIENT_SECRET');

$ACCESS_TOKEN = $SmDataJson->token;

$ACCESS_TOKEN_SECRET = $SmDataJson->tokenSecret;

$connection = new TwitterOAuth($CONSUMER_KEY, $CONSUMER_SECRET, $ACCESS_TOKEN, $ACCESS_TOKEN_SECRET);

$connection->setApiVersion('2');

$path = env('PIC_PATH').$post->pic->location;

$image = [];

if (Storage::disk('local')->exists($path)) {

$FullPath = Storage::disk('local')->path($path);

$connection->setApiVersion('1.1');

$media = $connection->upload('media/upload', ['media' => $FullPath]);

array_push($image, $media->media_id_string);

$connection->setApiVersion('2');

}

$data = [

// mb_substr keeps multibyte characters intact when truncating

'text' => mb_substr($post->text->descriptionResult, 0, (int) env('TWITTER_CHAR_LIMIT', 280)),

];

// Only attach media when an image was actually uploaded

if (!empty($image)) {

$data['media'] = ['media_ids' => $image];

}

$result = $connection->post("tweets", $data);

$httpCode = $connection->getLastHttpCode();

// Note: `$httpCode == 200 || 201` would always be true, so compare both values explicitly

if ($httpCode == 200 || $httpCode == 201) {

return redirect()->back()->with('success', 'Tweet posted successfully.');

} elseif ($httpCode == 403) {

return redirect()->back()->with('error', 'Error: ' . $result->detail);

} else {

return redirect()->back()->with('error', 'Error: ' . $result->errors[0]->message);

}

}

}
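A detail worth checking when testing the HTTP status returned by the Twitter API: in PHP, an expression of the form `$code == 200 || 201` is parsed as `($code == 200) || 201`, and because 201 is truthy the whole condition is always true. Below is a minimal standalone sketch of a stricter check. The helper name `isSuccessStatus` is hypothetical and not part of the original controller.

```php
<?php

// Hypothetical helper (an assumption for illustration, not part of TweetController):
// returns true only for the status codes we treat as success.
function isSuccessStatus(int $code): bool
{
    return in_array($code, [200, 201], true);
}

$code = 403;

// `==` binds tighter than `||`, so this is ($code == 200) || 201, which is always true.
var_dump($code == 200 || 201);     // bool(true)

// The explicit check gives the intended result.
var_dump(isSuccessStatus($code));  // bool(false)
var_dump(isSuccessStatus(201));    // bool(true)
```

With a check like this, the 403 and generic error branches in sendTweet become reachable.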

Create app\Http\View\Composers\SocialMediaComposer.php

<?php

namespace App\Http\View\Composers;

use Illuminate\View\View;

use App\Models\SM;

use Illuminate\Support\Facades\Auth;

use App\Models\Account;

use App\Models\Post;

use App\Http\Controllers\AccountController;

class SocialMediaComposer

{

/**

* Bind data to the view.

*

* @param \Illuminate\View\View $view

* @return void

*/

public function compose(View $view)

{

if ($view->getName() === 'auth.login' || empty(Auth::user())) {

return;

}

$user = Auth::user();

$accounts = Account::where('user_id', $user->id)->get();

$AccountController = app()->make(AccountController::class);

$selectedAccountId = $AccountController->sessionAccountId('selectedAccountId');

$sms = SM::where('account_id', $selectedAccountId)->get();

$view->with('accounts', $accounts);

$view->with('selectedAccountId', $selectedAccountId);

$view->with('sms', $sms);

}

}
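A view composer only runs once it has been registered with Laravel. If you have not done that elsewhere, here is a minimal sketch, assuming you register it in a dedicated service provider. The provider name `ViewServiceProvider` is my assumption, and the provider itself still needs to be listed with your other providers (config/app.php or bootstrap/providers.php, depending on your Laravel version).

```php
<?php

namespace App\Providers;

use App\Http\View\Composers\SocialMediaComposer;
use Illuminate\Support\Facades\View;
use Illuminate\Support\ServiceProvider;

// Assumed provider name; any registered service provider works.
class ViewServiceProvider extends ServiceProvider
{
    public function boot(): void
    {
        // Attach the composer to every view. The composer itself returns early
        // for the login view and for guests, so '*' is safe here.
        View::composer('*', SocialMediaComposer::class);
    }
}
```

Using '*' keeps $accounts, $selectedAccountId and $sms available in the layout and in every page. You can pass a list of view names instead if you want to limit the extra queries.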

Create resources\views\posts\index.blade.php

@extends('layouts.app')

@section('actions')

<div class="col-sm-2">

<h1>Actions</h1>

<form action="{{ route('posts.create') }}" method="POST">

@csrf

<!-- Your form fields here -->

<button type="submit" class="btn btn-primary col-sm-12">Create New Post</button>

</form>

</div>

@endsection

@section('content')

<div class="container-fluid">

<h1>Posts</h1>

@if (session('success'))

<div class="alert alert-success alert-dismissible fade show">

{{ session('success') }}

<button type="button" class="close" data-dismiss="alert" aria-label="Close">

<span aria-hidden="true">×</span>

</button>

</div>

@endif

@if (session('error'))

<div class="alert alert-danger alert-dismissible fade show">

{{ session('error') }}

<button type="button" class="close" data-dismiss="alert" aria-label="Close">

<span aria-hidden="true">×</span>

</button>

</div>

@endif

<table class="table table-striped table-bordered">

<thead>

<tr></tr>

<tr></tr>

<tr></tr>

<tr></tr>

<tr></tr>

<tr></tr>

</thead>

<tbody>

@foreach ($posts as $post)

@if ($selectedAccountId == $post->account->id)

<tr>

<td>Description</td>

<td>Prompt</td>

<td>Result</td>

<td>Action</td>

</tr>

<tr>

<td class="col-sm-1">Document</td>

<td class="col-sm-3" data-post-id="{{ $post->id }}" data-model="text" data-field="prompt" contenteditable="true">{{ $post->text->prompt ?? 'No text prompt' }}</td>

<td class="col-sm-7 prompt-result" data-post-id="{{ $post->id }}" data-model="text" data-field="result" contenteditable="true">{{ $post->text->result ?? 'No text result' }}</td>

<td class="col-sm-1"></td>

</tr>

<tr>

<td>Image</td>

<td data-post-id="{{ $post->id }}" data-model="pic" data-field="comfyPrompt" contenteditable="true">{{ $post->pic->comfyPrompt ?? 'No image prompt' }}</td>

<td class="prompt-result" data-post-id="{{ $post->id }}" data-model="pic" data-field="comfyResult" contenteditable="true">{{ $post->pic->comfyResult ?? 'No image result' }}</td>

<td><a href="{{ route('generate.llm', ['task' => 'pic']) }}" class="btn btn-primary col-sm-12">Generate Picture</a></td>

</tr>

<tr>

<td>Picture</td>

<td data-post-id="{{ $post->id }}" data-model="pic" data-field="location" contenteditable="true">{{ $post->pic->location ?? 'No image' }}</td>

<td class="prompt-result">

<img class="imageLoader{{$post->id}}" src=""

data-account-id="{{ $post->account->id }}"

data-pic-location="{{ $post->pic->location ?? '' }}"

data-toggle="modal" data-target="#imageModal{{$post->id}}">

<span class="loading loading{{$post->id}}"></span></td>

<!-- Modal -->

<div class="modal fade" id="imageModal{{$post->id}}" tabindex="-1" role="dialog" aria-labelledby="imageModalLabel{{$post->id}}" aria-hidden="true">

<div class="modal-dialog modal-xl" role="document">

<div class="modal-content">

<div class="modal-header">

<h5 class="modal-title" id="imageModalLabel{{$post->id}}"></h5>

<button type="button" class="close" data-dismiss="modal" aria-label="Close">

<span aria-hidden="true">×</span>

</button>

</div>

<div class="modal-body">

<img id="image{{$post->id}}" class="img-fluid zoomable" src="" alt="Image">

</div>

</div>

</div>

</div>

<script src="https://cdnjs.cloudflare.com/ajax/libs/medium-zoom/1.0.6/medium-zoom.min.js"></script>

<script>

$(document).ready(function() {

$('.imageLoader{{$post->id}}').click(function() {

var accountId = $(this).data('account-id');

var picLocation = $(this).data('pic-location');

var imageSrc = '/picture/' + accountId + '/' + picLocation;

$('#image{{$post->id}}').attr('src', imageSrc); // Set the image source

$('#imageModal{{$post->id}}').modal('show'); // Show the modal

});

$('#image{{$post->id}}').on('click', function() {

var $img = $(this);

var isZoomed = $img.hasClass('zoomed');

if (!isZoomed) {

// Save the current scroll position

var scrollTop = $('.modal-body').scrollTop();

var scrollLeft = $('.modal-body').scrollLeft();

// Toggle the zoom class

$img.addClass('zoomed');

// Set the scroll position to maintain the view

$('.modal-body').scrollTop(scrollTop);

$('.modal-body').scrollLeft(scrollLeft);

} else {

$img.removeClass('zoomed');

}

});

});

</script>

<td><a href="{{ route('upscale.llm') }}" class="btn btn-primary col-sm-12">Upscale Picture</a></td>

</tr>

<tr>

<td>Post</td>

<td data-post-id="{{ $post->id }}" data-model="text" data-field="descriptionPrompt" contenteditable="true">{{ $post->text->descriptionPrompt ?? 'No description prompt' }}</td>

<td class="prompt-result" data-post-id="{{ $post->id }}" data-model="text" data-field="descriptionResult" contenteditable="true">{{ $post->text->descriptionResult ?? 'No description result' }}</td>

<td id="charCountContainer">Characters counter loading...</td>

</tr>

<tr>

<td></td>

<td><a href="{{ route('generate.llm', ['task' => 'all']) }}" class="btn btn-primary col-sm-12">Generate Post</a><br><br></td>

<td><button type="submit" form="smform" class="btn btn-primary col-sm-12" formaction="{{ route('publish', ['postId' => $post->id]) }}">Publish</button></td>

<td></td>

</tr>

<script>

document.addEventListener('DOMContentLoaded', function() {

checkPictureStatus({{ $post->id }})

});

</script>

@endif

@endforeach

</tbody>

</table>

</div>

@endsection

@section('scripts')

<script>

function checkPictureStatus(postId) {

const imgElement = document.querySelector(`img.imageLoader${postId}`);

const spinnerElement = document.querySelector(`.loading${postId}`);

const checkUrl = `/check-picture/${postId}`;

const accountId = imgElement.dataset.accountId;

const picLocation = imgElement.dataset.picLocation;

const pictureUrl = `/picture/${accountId}/${picLocation}`;

// console.log('checking picture');

spinnerElement.style.display = 'block';

fetch(checkUrl)

.then(response => response.json())

.then(data => {

if (data.pictureExists) {

imgElement.src = pictureUrl;

spinnerElement.style.display = 'none'; // Hide the spinner

// console.log('pic found');

} else {

setTimeout(() => checkPictureStatus(postId), 1000); // Try again in 1 second

// console.log('waiting for pic');

}

})

.catch(error => {

console.error('Error:', error);

spinnerElement.style.display = 'none'; // Hide the spinner

});

}

document.addEventListener('DOMContentLoaded', function() {

var contentEditableElement = document.querySelector('[data-field="descriptionResult"]');

var charCountContainer = document.getElementById('charCountContainer');

// Nothing to count when no post is shown for the selected account

if (!contentEditableElement || !charCountContainer) {

return;

}

function updateCharCount() {

var charCount = contentEditableElement.innerText.length;

charCountContainer.textContent = charCount + ' / 280';

}

contentEditableElement.addEventListener('input', updateCharCount);

updateCharCount();

});

document.addEventListener('DOMContentLoaded', function() {

var editableElements = document.querySelectorAll('[contenteditable="true"]');

editableElements.forEach(function(element) {

element.addEventListener('blur', function() {

var postId = this.dataset.postId;

var model = this.dataset.model;

var field = this.dataset.field;

var value = this.innerText;

updateField(postId, model, field, value);

});

});

});

function updateField(postId, model, field, value) {

$.ajax({

url: '/posts/' + postId + '/update-field',

type: 'PUT',

data: {

_token: '{{ csrf_token() }}',

model: model,

field: field,

value: value

},

success: function(response) {

if (response.success) {

// Optionally, you can show a success message or update the UI

console.log('Field updated successfully.');

} else {

console.error('An error occurred: ' + response.message);

}

},

error: function(xhr, status, error) {

console.error('An error occurred: ' + error);

}

});

}

</script>

@endsection

Create routes\web.php

<?php

use Illuminate\Support\Facades\Route;

Route::get('/', function () {

return view('welcome');

});

Auth::routes();

use App\Http\Controllers\PostController;

Route::get('/test', [PostController::class, 'test'])->name('posts.test')->middleware('auth');

Route::get('/posts', [PostController::class, 'index'])->name('posts.index')->middleware('auth');

Route::post('/posts', [PostController::class, 'create'])->name('posts.create')->middleware('auth');

Route::put('/posts/{post}/update-field', [PostController::class, 'updateField'])->name('posts.update-field')->middleware('auth');

Route::post('publish/{postId}', [PostController::class, 'publish'])->name('publish')->middleware('auth');

use App\Http\Controllers\SMController;

Route::get('/redirect/{provider}', [SMController::class, 'redirectToProvider'])->name('social.redirect')->middleware('auth');

Route::get('/callback/{provider}', [SMController::class, 'callback'])->name('social.callback')->middleware('auth');

Route::get('social/{id}', [SMController::class, 'index'])->name('sms.social.index')->middleware('auth');

Route::get('smlist', [SMController::class, 'list'])->name('sms.list')->middleware('auth');

Route::post('sm/delete', [SMController::class, 'delete'])->name('sm.delete')->middleware('auth');

use App\Http\Controllers\AccountController;

Route::post('account/switch', [AccountController::class, 'switch'])->name('account.switch')->middleware('auth');

Route::get('/home', [App\Http\Controllers\HomeController::class, 'index'])->name('home')->middleware('auth');

use App\Http\Controllers\LlmController;

Route::get('generate/{task}', [LlmController::class, 'generate'])->name('generate.llm')->middleware('auth');

Route::get('upscale', [LlmController::class, 'upscale'])->name('upscale.llm')->middleware('auth');

Route::get('/picture/{accountid}/{filename}', [PostController::class, 'pictureFile'])->name('picture.file')->middleware('auth');

use App\Http\Controllers\TweetController;

Route::get('tweet/{postId}', [TweetController::class, 'sendTweet'])->name('tweet')->middleware('auth');

Route::get('/check-picture/{postId}', [PostController::class, 'checkFile'])->name('check.file')->middleware('auth');
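Since almost every route above repeats ->middleware('auth'), you could wrap them in a route group instead. This is only a sketch of the idea with three of the routes from this file, not a full replacement for the file.

```php
<?php

use App\Http\Controllers\PostController;
use Illuminate\Support\Facades\Route;

// Every route declared inside the group gets the 'auth' middleware.
Route::middleware('auth')->group(function () {
    Route::post('/posts', [PostController::class, 'create'])->name('posts.create');
    Route::put('/posts/{post}/update-field', [PostController::class, 'updateField'])->name('posts.update-field');
    Route::get('/check-picture/{postId}', [PostController::class, 'checkFile'])->name('check.file');
});
```

The behavior is the same as chaining the middleware on each route, but a route added later cannot be left unprotected by accident.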

There are a few more files, such as the migrations, but the ones above are the most important. I will make my GitHub repository available soon so you can download everything from there.
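To give an idea of what such a migration could look like, here is a rough sketch for the posts table. The columns text_id, pic_id, account_id and time come from the controller code in this guide. The table names (posts, texts, pics, accounts), the nullable choices and the timestamps are my assumptions, so adjust them to your own schema.

```php
<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    public function up(): void
    {
        Schema::create('posts', function (Blueprint $table) {
            $table->id();
            // constrained() infers the referenced table from the column name
            // (text_id -> texts, pic_id -> pics, account_id -> accounts); assumed names.
            $table->foreignId('text_id')->nullable()->constrained();
            $table->foreignId('pic_id')->nullable()->constrained();
            $table->foreignId('account_id')->constrained();
            // Set through updateField when a post is scheduled; assumed nullable.
            $table->timestamp('time')->nullable();
            $table->timestamps();
        });
    }

    public function down(): void
    {
        Schema::dropIfExists('posts');
    }
};
```

Because of the foreign keys, the texts, pics and accounts tables need to be migrated before this one.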

Bringing it all together

Some pieces are still missing, such as a hotfolder and how to get the Stable Diffusion workflow to work with this script.

I will add these pieces later.